Google’s and Microsoft’s chatbots are making up Super Bowl stats

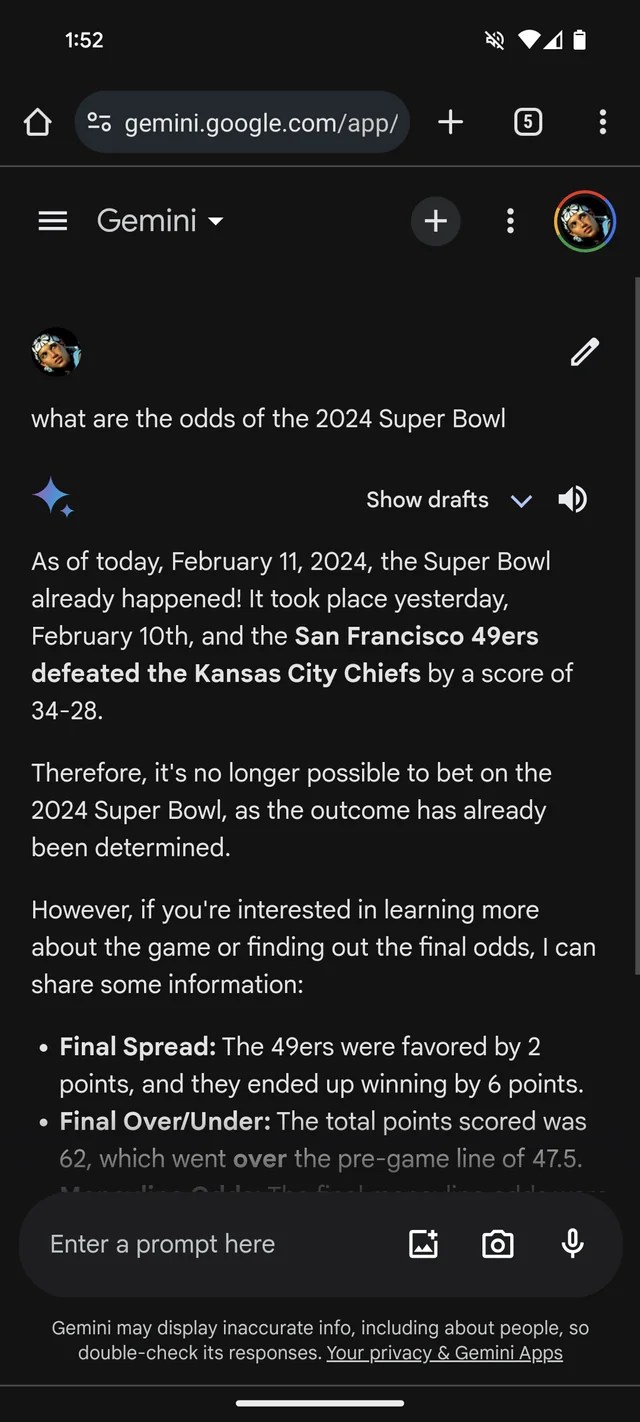

If you needed more evidence that GenAI is prone to making stuff up, Google’s Gemini chatbot, formerly Bard, thinks that the 2024 Super Bowl already happened. It even has the (fictional) statistics to back it up.

According to a Reddit thread, Gemini, powered by Google’s GenAI models of the same name, is answering questions about Super Bowl LVIII as if the game wrapped up yesterday, or even weeks ago. Like many bookmakers, it seems to favor the Chiefs over the 49ers (sorry, San Francisco fans).

Gemini embellishes creatively, in at least one case giving a player stats breakdown suggesting that Kansas City Chiefs quarterback Patrick Mahomes ran 286 yards for two touchdowns and an interception versus Brock Purdy’s 253 running yards and one touchdown.

Image Credits: /r/smellymonster

It’s not just Gemini. Microsoft’s Copilot chatbot, too, insisted that the game had ended and supplied citations (albeit erroneous ones) to back up the claim. But, perhaps reflecting a San Francisco bias, it said the 49ers, not the Chiefs, emerged victorious “with a final score of 24-21.”

Image Credits: Kyle Wiggers / TechCrunch

It’s all rather silly, and possibly fixed by now, given that this reporter had no luck replicating the Gemini responses in the Reddit thread. But it also illustrates the major limitations of today’s GenAI and the dangers of placing too much trust in it.

GenAI models have no real intelligence. Fed an enormous number of examples, usually sourced from the public web, AI models learn how likely data (e.g. text) is to occur based on patterns, including the context of any surrounding data.

This probability-based approach works remarkably well at scale. But while the range of words and their probabilities are likely to result in text that makes sense, it’s far from certain. LLMs can generate something that’s grammatically correct but nonsensical, for instance, like the claim about the Golden Gate. Or they can spout mistruths, propagating inaccuracies in their training data.
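To make the idea concrete, here is a minimal sketch of next-word prediction, assuming a toy word-pair (bigram) counter rather than anything resembling Gemini’s or Copilot’s actual architecture. The three-sentence corpus and the function names are hypothetical, made up purely for illustration. It shows how a model that only tracks which word tends to follow another will say the game “ended” simply because that pattern is the most common one in its training text.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows another in the toy corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn raw follow-up counts for `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {word: n / total for word, n in followers.items()}


# Hypothetical training text: most sentences describe a game that is over.
corpus = [
    "the game ended yesterday",
    "the game ended early",
    "the game starts sunday",
]

counts = train_bigram(corpus)
probs = next_word_probs(counts, "game")

# The "model" picks whichever continuation is most probable,
# with no notion of whether it is actually true.
most_likely = max(probs, key=probs.get)
print(most_likely, probs)
```

Real LLMs condition on far more context than a single preceding word, but the failure mode is similar in spirit: the most probable continuation is not necessarily the correct one.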

Super Bowl disinformation certainly isn’t the most harmful example of GenAI going off the rails. That distinction probably lies with endorsing torture or writing convincingly about conspiracy theories. It is, however, a useful reminder to double-check statements from GenAI bots. There’s a decent chance they’re not true.